If you selfhost any applications and have wondered how best to access them from outside your network, you’ve undoubtedly come across Cloudflare. Cloudflare offers two services in particular that might be attractive to homelabbers and selfhosters: reverse proxying and “tunnels”.

Both of these offer some degree of benefit: proxying potentially offers a degree of Denial of Service (DoS) [1] protection and use of their Web Application Firewall (WAF), and the tunnel adds the benefit of circumventing the host’s firewall and port-forwarding rules. To sweeten the deal, both of these services are offered “for free”, with the proxying actually being the default option on their free plans.

Let’s examine these benefits briefly and see why they’re as popular as they are.

Advantages

DoS Protection

By virtue of being such a massive network, Cloudflare’s proxies are able to absorb a massive number of requests and block those requests from reaching their customer. You can think of it as a dam: when a massive flood of requests comes in, Cloudflare is in front of the customer holding back the waters.

WAF

Cloudflare’s WAF allows customers to set firewall rules on the proxy itself, which can block or limit outside requests from ever reaching the customer, similar to the DoS protection.

Bypassing Firewalls (tunnel)

Many firewalls are configured to allow outgoing connections and block incoming connections. This is great for security, since it prevents others from accessing your stuff from the public internet, but it can be a problem if you want people to access your website or services. Cloudflare’s tunnel service takes advantage of the “allow outgoing” rule to establish a connection from the host server to Cloudflare, and then allows incoming requests to tunnel through this connection and access the host server.
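The "allow outgoing, block incoming" behaviour can be sketched with a toy model of a stateful firewall. This is only an illustration under stated assumptions: the `StatefulFirewall` class, the host name `tunnel-edge.example`, and the port numbers are all hypothetical, and this is not how Cloudflare's tunnel client is actually implemented.

```python
from dataclasses import dataclass, field


@dataclass
class StatefulFirewall:
    """Toy model: outbound traffic is allowed, inbound only on known flows."""

    # Each flow is (local_port, remote_host, remote_port), recorded on the way out.
    established: set = field(default_factory=set)

    def outbound(self, local_port: int, remote_host: str, remote_port: int) -> bool:
        # "Allow outgoing": remember the flow so replies can come back in.
        self.established.add((local_port, remote_host, remote_port))
        return True

    def inbound(self, local_port: int, remote_host: str, remote_port: int) -> bool:
        # "Block incoming": only traffic on a flow we opened ourselves gets through.
        return (local_port, remote_host, remote_port) in self.established


fw = StatefulFirewall()

# A stranger connecting directly to the home server is dropped.
direct_visit = fw.inbound(443, "203.0.113.50", 51000)

# The tunnel client dials out to the (hypothetical) tunnel edge first...
fw.outbound(40000, "tunnel-edge.example", 443)
# ...so traffic the edge sends back down that same connection is accepted.
tunnelled_visit = fw.inbound(40000, "tunnel-edge.example", 443)

print(direct_visit, tunnelled_visit)
```

The direct visit is rejected while the tunnelled one is accepted, because the second arrives on a flow that the host itself opened.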

Bypassing Port-Forwarding rules (tunnel)

If you’re hosting a service from behind an IPv4 address, there’s a good chance that you’re using a technology called Network Address Translation (NAT) [2]. This allows you to have multiple systems running behind a single public IP address. This concept can be taken further by Carrier Grade (CG) NAT, where the ISP applies NAT rules to their customers. The problem with NAT is that it becomes difficult to make an incoming request to a machine behind NAT. To an outside system, all of the machines behind the NAT layer appear to have a single address. To partially overcome this, a rule needs to be configured that says “when a request comes in for port X, forward it to private address Y, port Z.” This allows, in a limited way, an outside connection to make use of the NAT address and a particular port to send traffic to a specific machine behind the NAT layer. In the case of CGNAT, however, these rules would need to be created by the ISP, a service which most do not offer. Just as it takes advantage of the outgoing firewall rules, a tunnel uses an outgoing connection to avoid the need for specific port-forwarding rules, and then tunnels a list of ports straight to the host machine.
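A port-forwarding rule of the form "port X goes to private address Y, port Z" is essentially a lookup table. The sketch below assumes a made-up table (`PORT_FORWARDS`) with example private addresses; real routers configure this through their own interface rather than code like this.

```python
from typing import Optional

# Hypothetical port-forwarding table on a home router:
# public port -> (private address, private port)
PORT_FORWARDS = {
    443: ("192.168.1.10", 443),
    8080: ("192.168.1.20", 80),
}


def route_inbound(public_port: int) -> Optional[tuple]:
    """Return the private destination for an inbound request, or None if dropped."""
    return PORT_FORWARDS.get(public_port)


print(route_inbound(8080))  # forwarded to the second machine
print(route_inbound(22))    # no rule, so the router has nowhere to send it
```

Behind CGNAT the equivalent table lives on the ISP's equipment, which is why you cannot add entries to it yourself.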

On the surface, this all sounds like a great product; it allows smaller customers to selfhost even if in a sub-optimal environment.

Considerations

However, Cloudflare’s proxy and tunnel models both have several aspects that might make some want to reconsider their use.

DoS Protection

DoS attacks can vary in size and scope, and their impact will depend not only on the volume of the attack, but also on the capabilities of the targeted system. For example, for a small home server, a few hundred connections per second might be enough to cause a denial of service. At the opposite extreme, Cloudflare’s servers routinely handle an average of 100,000,000 requests per second without issue. With those numbers in mind, a DoS on a small home server might be so small that it goes unnoticed by Cloudflare’s protections. I do have to say “might” here, though, because I could not find a definitive answer to how Cloudflare determines what a DoS attack looks like. I would expect, however, that the scale of a home server would be so small compared to the majority of Cloudflare’s customer base that an effective DoS attack would not look significantly different than “normal” traffic.

Lastly, and quite importantly, this protection only exists for attacks targeted at a domain name. Domains exist to make the internet easier for humans; for a computer script or bot, an IP address is far simpler. If an attacker targets the host’s IP address directly, that attack will completely bypass whatever protection Cloudflare was providing. If you’re behind CGNAT, this means the attack will target the ISP, but if you have a publicly addressable IP address, that attack is directly targeting your home router.
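To put the two numbers side by side, here is a quick back-of-the-envelope calculation. It assumes "a few hundred connections per second" means 300, which is an illustrative figure rather than a measurement.

```python
# Assumption: "a few hundred connections per second" is taken as 300.
HOME_SERVER_LIMIT_RPS = 300
# Figure quoted above for Cloudflare's average load.
CLOUDFLARE_AVERAGE_RPS = 100_000_000

share = HOME_SERVER_LIMIT_RPS / CLOUDFLARE_AVERAGE_RPS
print(f"{share:.4%} of Cloudflare's average traffic")
```

Under that assumption, an attack big enough to take down the home server amounts to roughly 0.0003% of the traffic Cloudflare handles on average.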

Security

Cloudflare offers these services for free, and as the saying goes: “If you are not paying for it, you’re not the customer; you’re the product.”

Cloudflare advertises that their proxy and tunnel services can optionally provide “end-to-end” (e2e) encryption. In the traditional and commonly used definition, this means that traffic is encrypted by the client (you or your users) and decrypted by the host (your server). Under the normal definition, no intermediary device can decrypt and read the traffic.

Cloudflare, however, uses the term a little differently, as you can see in this graphic.

Instead of providing traditional e2e, Cloudflare acts as a “Man in the Middle” (MITM), receiving the traffic, decrypting it, analyzing it, and then re-encrypting it before sending it along. Cloudflare does this in order to provide their services; they collect and store the unencrypted data in order to apply WAF rules, analyze patterns, and so on.

Now, Cloudflare is a giant company with billions in contracts; contracts they could potentially lose if they were found to be misusing customer data. They wouldn’t benefit by leaking your nude photos from your NextCloud instance or exposing your password for Home Assistant, but you should understand that by giving them the keys to your data, you are placing your trust in them. This MITM position also means that, theoretically, Cloudflare could alter your content without you (or your users) knowing about it. Normally this would cause a modern browser to display a very large “SOMEONE MIGHT BE TRYING TO STEAL YOUR DATA” warning, but because you are specifically allowing Cloudflare to intercept your data, the browser has no way of confirming whether the content actually came from your server or Cloudflare themselves.

Cloudflare does have a privacy policy, which explains exactly how Cloudflare intends to use your data, and a transparency report, which is intended to show exactly how many times each year Cloudflare has provided customer data to government entities.
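The difference between the two models can be sketched with a toy example. Everything here is an assumption made for illustration: the `xor` function is a stand-in for encryption and is NOT real cryptography, the keys and function names are hypothetical, and real TLS sessions are far more involved.

```python
def xor(data: bytes, key: bytes) -> bytes:
    """Toy stand-in for encryption (NOT real cryptography); XOR is its own inverse."""
    return bytes(b ^ key[i % len(key)] for i, b in enumerate(data))


# Hypothetical session keys, one per encrypted hop.
CLIENT_EDGE_KEY = b"client<->edge"
EDGE_ORIGIN_KEY = b"edge<->origin"


def via_proxy(plaintext: bytes) -> tuple:
    """Proxied model: the edge terminates one session and opens another."""
    on_wire = xor(plaintext, CLIENT_EDGE_KEY)       # client encrypts for the edge
    seen_by_edge = xor(on_wire, CLIENT_EDGE_KEY)    # edge decrypts: plaintext is visible
    forwarded = xor(seen_by_edge, EDGE_ORIGIN_KEY)  # edge re-encrypts for the origin
    received = xor(forwarded, EDGE_ORIGIN_KEY)      # origin decrypts
    return seen_by_edge, received


def end_to_end(plaintext: bytes, shared_key: bytes) -> tuple:
    """Traditional e2e: the relay only forwards bytes it cannot decrypt."""
    on_wire = xor(plaintext, shared_key)  # client encrypts for the origin
    seen_by_relay = on_wire               # relay holds no key, sees ciphertext only
    received = xor(on_wire, shared_key)   # origin decrypts
    return seen_by_relay, received


secret = b"password=hunter2"
edge_view, origin_got = via_proxy(secret)
relay_view, origin_got_e2e = end_to_end(secret, b"client<->origin")
print(edge_view == secret, relay_view == secret)
```

In the proxied model the middle hop holds the plaintext, and could change it before re-encrypting. In the end-to-end model the middle hop only ever handles ciphertext.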

The warning you would normally get during a MITM attack:

Content Limitations

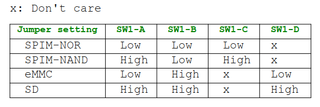

Lastly, Cloudflare, by default, only proxies or tunnels certain ports. If you want to forward an unusual port through Cloudflare, like 70 or 125, you would need to use a paid account or install additional software. Cloudflare's Terms of Service also limit the type of data you can serve through their free proxies and tunnels, such as prohibiting the streaming of video content on their free plans.
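As a rough sketch, the port restriction amounts to a membership check. The port sets below are an assumption based on Cloudflare's network ports documentation as of this writing and may have changed, so check the current documentation before relying on them. The function name is hypothetical.

```python
# Assumption: ports listed in Cloudflare's network ports documentation at the
# time of writing; verify against the current documentation.
PROXIED_HTTP_PORTS = {80, 8080, 8880, 2052, 2082, 2086, 2095}
PROXIED_HTTPS_PORTS = {443, 2053, 2083, 2087, 2096, 8443}


def is_proxied_by_default(port: int) -> bool:
    """Return True if the port appears in the assumed default proxy lists."""
    return port in PROXIED_HTTP_PORTS or port in PROXIED_HTTPS_PORTS


for port in (443, 70, 125):
    print(port, is_proxied_by_default(port))
```

Port 443 passes the check, while 70 and 125 do not, which matches the examples above.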

Alternatives

Are there other ways to get similar benefits?

- If only a limited number of users need access to your server or network and you have a public IP address, the best solution by far is to use a VPN, such as WireGuard. WireGuard allows you to securely access your network while exposing very little attack surface for bots or malicious actors, since it does not respond to packets that aren’t authenticated.

- If you don’t have a public IP, there’s a service called “Tailscale” which uses the same outgoing-connection trick to bypass firewalls and CGNAT, but instead of acting as a MITM privy to all of your traffic, Tailscale simply coordinates the initial connection and then allows the client and host to establish their own secure, encrypted connection.

- If you do want to expose a service to the world (like a public website) and you have a public IP address, you can simply forward the needed port/s from your router to the server (typically 443 and possibly 80).

- If you want your service to be exposed to the world, but are stuck behind CGNAT, then a Virtual Private Server (VPS) is a potential option. These typically have a small cost, but they provide you with a remote server that can act as a public gateway to your main server. They can also provide a degree of DoS protection, since they’re using a much larger network and you can simply turn off the connection between the VPS and your server until things calm down.
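The four options above boil down to two questions: do you have a public IP address, and does the service need to be open to the world? A minimal sketch of that decision follows. The function name and return strings are made up for illustration, and real setups will have more considerations than these two.

```python
def suggest_alternative(has_public_ip: bool, open_to_world: bool) -> str:
    """Map the two questions from the list above onto its four suggestions."""
    if open_to_world:
        # Public service: forward ports yourself, or put a VPS in front.
        return "port forwarding (443, possibly 80)" if has_public_ip else "VPS gateway"
    # Limited set of users: a VPN-style solution is enough.
    return "WireGuard" if has_public_ip else "Tailscale"


print(suggest_alternative(has_public_ip=False, open_to_world=False))
print(suggest_alternative(has_public_ip=True, open_to_world=True))
```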

Closing thoughts

Cloudflare offers some great benefits, but if you're particularly security-minded, you may want to look into alternatives. Even though Cloudflare is trusted by numerous customers around the world, you still have to decide if you want to trust them with your data. That said, alternatives exist, but Cloudflare's offerings are mostly free and comparatively easy to use.

1. DoS, or a "Denial-of-Service (DoS) attack", is an attack in which an attacker floods a server with internet traffic to prevent legitimate users from accessing its services. Cloudflare offers an example of one type of DoS attack here.

2. NAT translates private IP addresses in an internal network to a public IP address before packets are sent to an external network.